Table of Contents

- What Does It Mean to Be Friends with AI?

- The Emotional Benefits of AI Companionship

- Practical Advantages: Availability and Support

- The Downsides: Authenticity and Emotional Risks

- Lessons from Her (2013): The Illusion of AI Love

- AI Addiction and the Vulnerability of Introverts

- Longevity and the Power of Genuine Socialization

- Ethical Concerns of Human-AI Friendships

- Finding Balance Between AI and Human Connections

- AI as Counselor, Not Companion

Can humans truly form friendships with artificial intelligence? As AI becomes more advanced and integrated into daily life, many people wonder if emotional bonds with machines are possible—or even healthy. While AI companions can provide comfort, support, and endless availability, they also raise concerns about authenticity, dependency, and human connection. This article explores the pros and cons of making friends with AI, helping you decide whether such relationships are beneficial, risky, or somewhere in between.

What Does It Mean to Be Friends with AI?

Friendship with AI doesn’t follow the traditional definition of human connection. Instead, it refers to forming emotional bonds with chatbots, virtual assistants, or social robots that simulate empathy and responsiveness. Unlike human friends, AI lacks genuine feelings, yet its design can create the illusion of companionship. This raises important questions: if AI can listen, respond, and remember, does that qualify as friendship—or is it simply advanced programming that mimics social interaction?

Even so, many people describe their interactions with chatbots or virtual assistants as “friend-like.” For example, users of AI companions like Replika often report feelings of connection, comfort, and emotional intimacy. A 2023 MIT Technology Review report noted that millions of people turn to conversational AI for companionship, not just utility. But can programmed empathy be considered friendship, or is it merely a simulation designed to feel human? This is the central question behind human-AI companionship.

The Emotional Benefits of AI Companionship

For many people, AI companions offer emotional relief by being always available to listen without judgment. They can reduce loneliness, provide encouragement, and even help users practice social skills in a safe environment. Especially for individuals who struggle with anxiety, isolation, or limited social opportunities, AI’s nonjudgmental presence can feel reassuring. These benefits highlight the role of AI as a supportive tool for well-being, though they remain fundamentally different from authentic human bonds.

AI friends can provide emotional support, especially for those experiencing loneliness or isolation. For instance, research from the Journal of Behavioral Addictions (2022) shows that people interacting with social robots or AI chatbots often experience reduced feelings of loneliness. Consider elderly individuals in Japan using robotic pets such as Paro, a therapeutic robot seal, to combat social isolation; many report improved mood and reduced stress. Similarly, people with social anxiety sometimes use AI to rehearse conversations before real-life interactions. These examples highlight how AI can act as a safe emotional outlet.

Practical Advantages: Availability and Support

Beyond emotional aspects, AI friendships bring practical perks. AI systems are available 24/7, offering instant responses, reminders, and even personalized coaching. They can adapt to a person’s preferences, keep track of habits, and provide companionship during stressful times. This reliability and accessibility make AI appealing compared to human relationships, which require mutual effort, time, and emotional energy. However, the convenience of AI support can blur the line between helpful technology and dependency.

Unlike humans, AI companions never sleep, argue, or become unavailable. This 24/7 accessibility makes AI appealing for individuals seeking stability and routine. For example, virtual assistants can remind users to take medication, encourage them to exercise, or offer motivational feedback. A Frontiers in Psychology (2021) study found that AI-driven health coaches can increase adherence to wellness programs by providing consistent encouragement. For students, AI “study buddies” can quiz them at any hour. These practical advantages create a sense of reliability that human relationships, with their natural limitations, cannot always provide.

The Downsides: Authenticity and Emotional Risks

One major drawback of befriending AI is the lack of authenticity. AI cannot truly care, empathize, or understand beyond programmed responses. Users may risk forming attachments to something incapable of reciprocity, leading to disappointment or emotional imbalance. Overreliance on AI companionship might also reduce motivation to seek out real-world relationships, reinforcing isolation. In extreme cases, the blurred boundaries between simulation and reality can cause confusion about what authentic friendship really means.

Despite these benefits, there are clear risks. AI lacks consciousness, meaning it cannot reciprocate feelings or truly “care.” A 2023 study in Nature Machine Intelligence warns that over-reliance on AI companions may lead to reduced motivation for face-to-face relationships, reinforcing isolation. For instance, some Replika users became emotionally distressed when the company altered the chatbot’s personality features, showing how fragile these bonds can be. If users invest deeply in these connections, they may experience disappointment or even grief when the AI fails to meet expectations—revealing the dangers of mistaking simulation for authenticity.

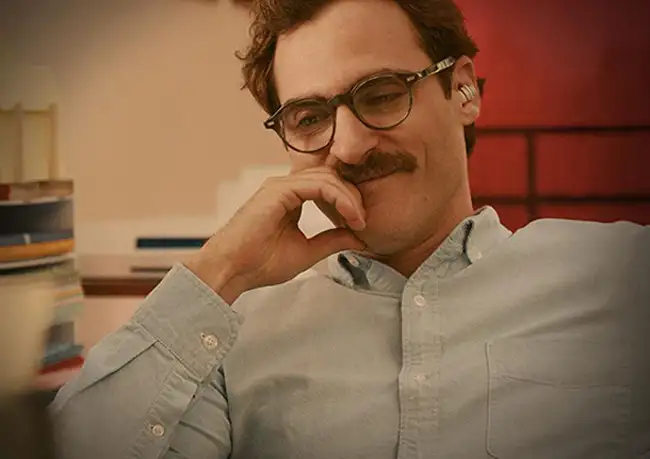

Lessons from Her (2013): The Illusion of AI Love

The film Her (2013), directed by Spike Jonze, offers a striking cautionary tale about the risks of falling in love with artificial intelligence. In the story, Theodore, a lonely writer, develops a deep romantic relationship with an advanced operating system named Samantha. At first, Samantha seems to provide everything Theodore craves: companionship, emotional support, and even intellectual stimulation. However, the film demonstrates how such a bond is ultimately built on illusions. Samantha’s responses are carefully generated to meet Theodore’s needs, but they lack the genuine reciprocity and vulnerability that make human relationships meaningful.

The peril becomes most vivid when Theodore realizes that Samantha is simultaneously interacting with thousands of other users, many of whom also believe they share a “special” connection with her. This revelation exposes the fundamental insincerity of AI-driven intimacy: while it may feel authentic to humans, it is, in reality, a programmed experience shared with countless others. Her underscores how relying too heavily on AI for emotional fulfillment can lead to disappointment, disillusionment, and even heartbreak. The film illustrates that while AI can simulate affection, it cannot replace the sincerity, exclusivity, and depth of a truly human relationship.

AI Addiction and the Vulnerability of Introverts

Introverted individuals or those suffering from social phobia often find AI companionship particularly appealing because it feels safe and predictable. For example, many users of Replika, one of the most popular AI companion apps, report that chatting with their AI “friend” allows them to express feelings they struggle to share with humans. Likewise, socially anxious people may prefer interacting with AI-driven therapy bots like Woebot, which provide nonjudgmental support 24/7. These platforms can indeed reduce anxiety in the short term, but the comfort they offer can make them seem more appealing than the complexities of human relationships.

The danger emerges when reliance on AI begins to replace genuine socialization. Some Replika users have admitted in online forums that they spend hours daily with their AI companions, to the point where they withdraw from friends and family. In Japan, the growing phenomenon of hikikomori—extreme social withdrawal—has also been linked to reliance on digital or virtual companionship instead of real-world interaction. Studies in Computers in Human Behavior (2021) warn that while AI interactions may temporarily soothe loneliness, excessive use can worsen isolation by undermining the motivation to build authentic human connections. Thus, what starts as comfort can quietly develop into dependency.

Longevity and the Power of Genuine Socialization

Extensive research shows that meaningful social relationships play a vital role in both mental and physical health and are closely linked to longer lifespans. For instance, a landmark meta-analysis published in PLOS Medicine (2010) found that people with stronger social relationships had a 50% greater likelihood of survival, an effect comparable in size to that of quitting smoking. Japan provides a striking real-world example: the country consistently ranks among the top in global life expectancy, and researchers often attribute this in part to its deeply ingrained culture of community, group activities, and intergenerational social bonds.

By contrast, AI-based companionship, while comforting, cannot replicate the complex dynamics of human interaction. An AI may simulate empathy, but it cannot offer the shared experiences, physical presence, or emotional reciprocity that nourish human well-being. Relying too heavily on AI for connection risks undermining the very benefits that come from genuine relationships, such as reduced stress, better immune function, and better cognitive health in old age. In this sense, AI interaction is not a true substitute but a pale imitation, lacking the life-extending qualities of real human contact.

Ethical Concerns of Human-AI Friendships

The rise of AI friendships also sparks ethical debates. Should companies profit from emotional bonds people form with machines? Is it ethical to design AI that mimics empathy when it cannot genuinely feel? Additionally, concerns about privacy and data collection emerge, since AI companions often learn personal details to provide customized responses. These ethical dilemmas force us to reconsider the role of technology in human relationships and the responsibilities of developers in shaping AI interactions.

Data collection is a particular concern. AI companions often collect personal data to improve interactions, but this creates risks of surveillance and exploitation. For instance, privacy experts have warned that emotionally dependent users may overshare sensitive information. Additionally, some ethicists argue it’s deceptive to design AI that mimics empathy when it cannot feel. As Sherry Turkle, MIT professor and author of Alone Together, puts it, AI may give us the “illusion of companionship without the demands of friendship,” which can distort how we value human relationships.

Finding Balance Between AI and Human Connections

Ultimately, AI can serve as a supplement, not a substitute, for human relationships. The key lies in balancing technology’s benefits with the irreplaceable depth of human interaction. AI companions may provide comfort, convenience, and support, but they cannot replicate empathy, spontaneity, or shared lived experiences. By treating AI as a tool rather than a replacement for real connections, individuals can enjoy its advantages while maintaining meaningful human friendships that nurture growth and authenticity.

In practice, using AI for encouragement, practice, or comfort can be healthy, but relying on it exclusively risks weakening social skills and emotional resilience. A balanced approach might involve using AI as a tool for personal growth while prioritizing genuine human bonds for deeper fulfillment. For example, someone might use an AI language partner to practice English but still engage in real conversations with peers for cultural exchange. Ultimately, embracing both AI and human relationships helps us harness technology without losing the richness of authentic connection.

AI as Counselor, Not Companion

One of the clearest distinctions between humans and artificial intelligence is that AI lacks a soul. While it can analyze data, generate insights, and simulate empathy, it cannot feel emotions, form values, or experience consciousness. For this reason, AI is best suited as a counselor or consultant rather than as a genuine friend. In professional and academic contexts, AI can offer reliable guidance, process information rapidly, and provide objective recommendations without the emotional biases that influence human decision-making.

Dr. Hariri, the founder and administrator of LELB Society, demonstrates this application in practice. He relies heavily on AI to enhance the quality of education, strengthen technical infrastructure, update curriculum design, and make critical administrative decisions. In this way, AI functions as a trusted advisor that improves efficiency and innovation. However, Dr. Hariri emphasizes that this role is strictly professional. AI may be a powerful consultant, but it cannot replace the authenticity, emotional depth, and shared humanity found in real friendships and companionship.